New paper: Computers vs. humans as a source of information in teaching environments. Who do we trust more?

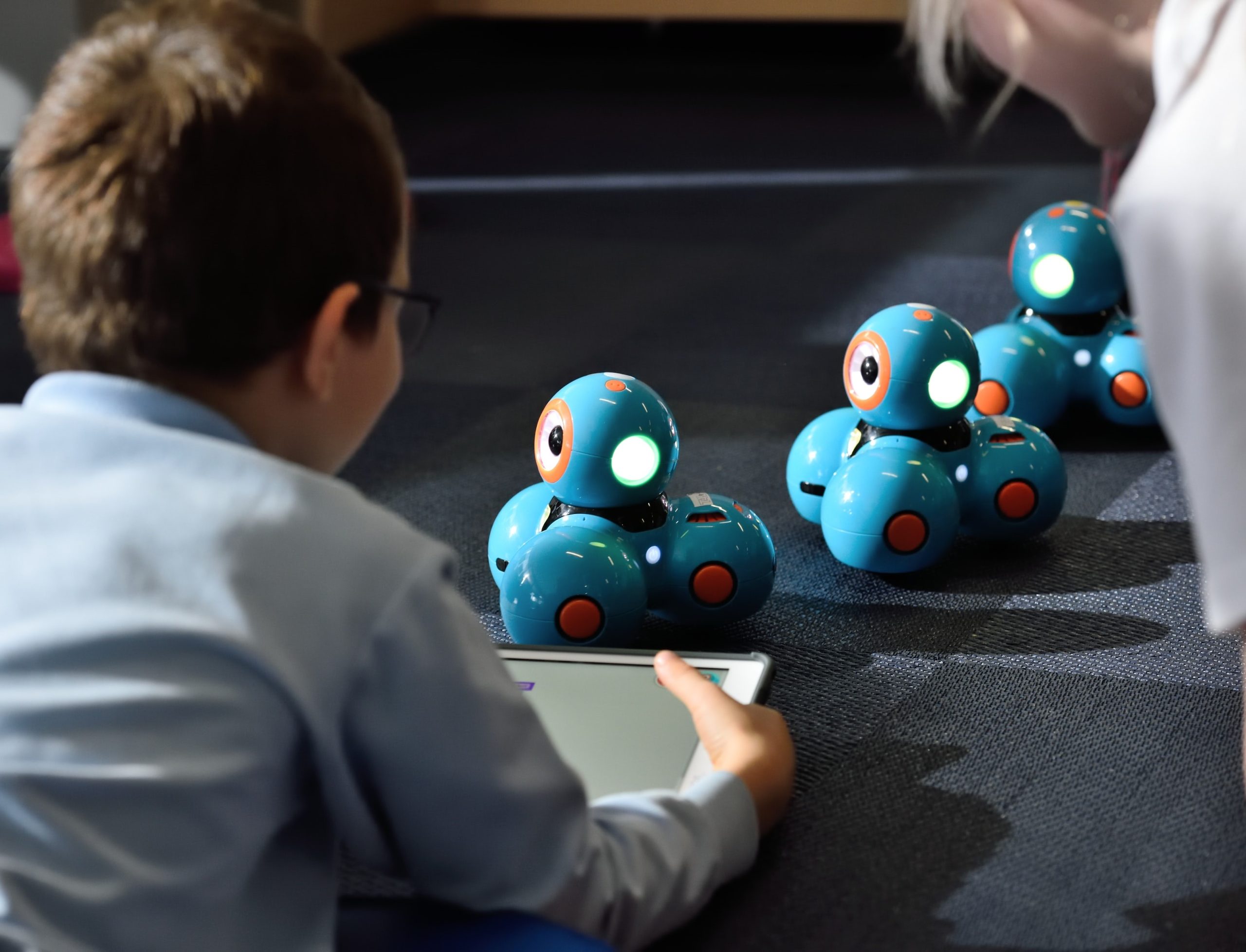

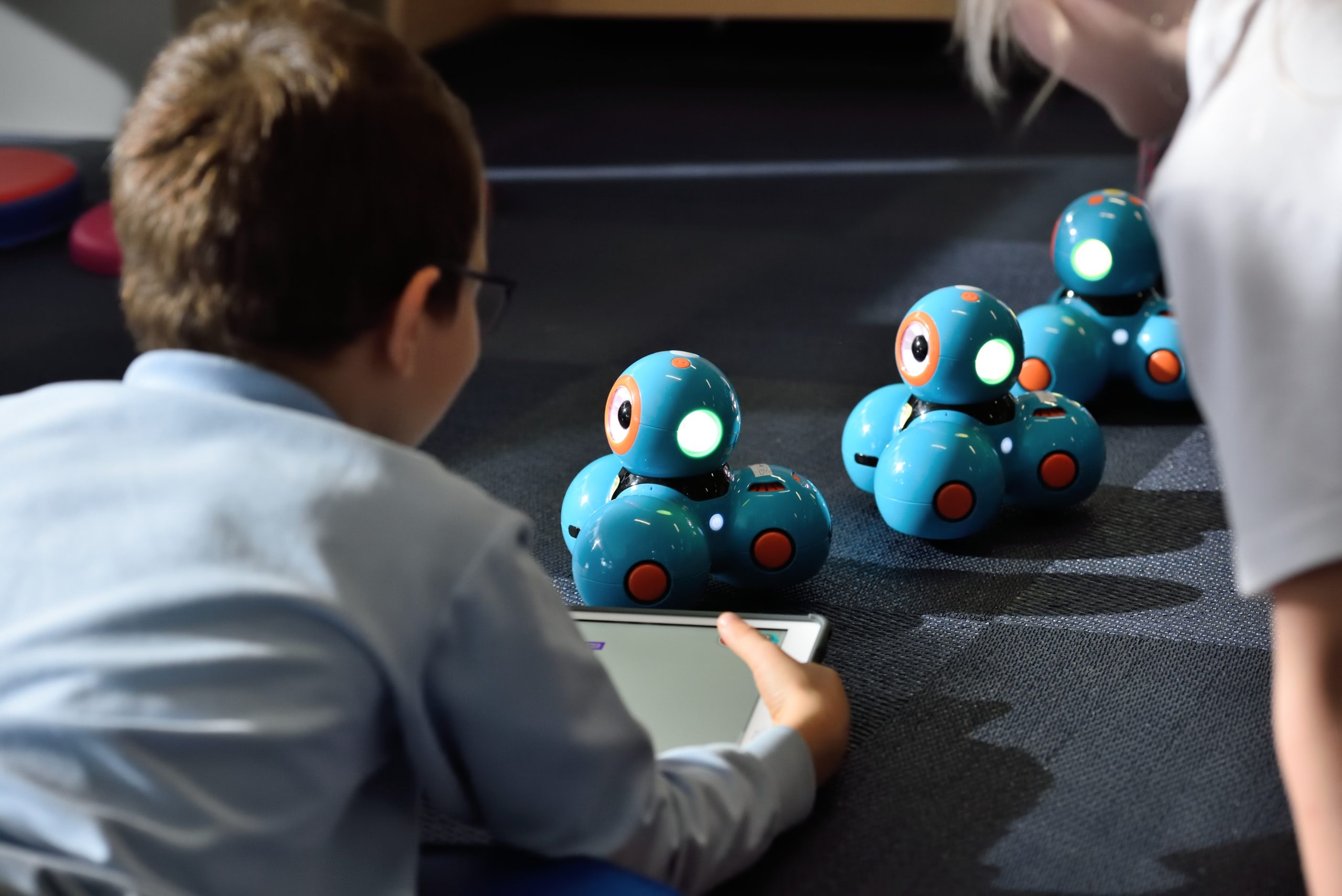

Although we often don’t notice, we interact with robots on a daily basis: from the popular “virtual home assistants” answering our questions about the weather to the navigation apps guiding us around our cities, computer voices accompany us wherever we go. But how does our mind really perceive them? Do we really trust them? A study conducted by Nicolas Spatola, Johann Chevalère, and Rebecca Lazarides from Science of Intelligence and the University of Potsdam shows that when people need help or hints to solve a difficult task, hints perceived as human-provided have a more positive effect on performance and motivation than hints perceived as computer-provided, although this may change depending on the individual’s motivation to achieve their goal. The study, published in Paladyn, Journal of Behavioral Robotics, is part of the Science of Intelligence research project ‘From understanding learners’ adaptive motivation and emotion to designing social learning companions,’ headed by cluster members Rebecca Lazarides (University of Potsdam), Verena Hafner (HU Berlin) and Niels Pinkwart (HU Berlin). The project aims to understand the key components of social interaction between humans and artificial agents, and the present experiments show the importance of considering psychosocial and motivational aspects in human-robot interactions, especially in teaching environments.

Human-robot interactions have been the object of research for decades, encompassing artificial intelligence, robotics, natural language understanding, and psychology. This study investigated one fundamental aspect that is often neglected in psychology and educational sciences: how the source of information, either human or computer, influences its perceived reliability and the associated performance, and how this influence may be modulated by motivational processes.

Human and computer-based hints

The researchers asked 100 participants to carry out a task in which they had to complete a logical sequence by choosing among several proposed answers. When they failed, they were given the opportunity to receive a hint that would help them solve the task. “The hint was either perceived as emanating from a computer or as emanating from a human teacher. However, unbeknownst to them, there was no real computer program or human behind it.” The message was identical across conditions except for the segment at the beginning of the experiment that informed participants about the nature of the agent. “Our goal was, first, to understand if the perceived nature of the imaginary agent had an effect on performance and motivation,” says Johann Chevalère, one of the authors of the study. “When the hint was perceived as human, performance was better, especially in the difficult tasks.” This suggests that participants trusted humans more, but why is that? “When you are presented with a difficult task, you engage more cognitive resources to solve the task; the larger the cognitive resources you engage, the fewer resources you have left to analyze the sources of information. This means that you will rely more on stereotypical reasoning and on the idea that bots are less trustworthy than humans, a commonly shared stereotype,” explained Chevalère.

Adding motivation to the picture

The researchers also wanted to go a little further: “In order to hopefully replicate results, we decided to perform another experiment, increasing sample size to nearly 1000 participants and also assessing achievement goal orientations.” Through a questionnaire handed out after the task (the same task as in experiment 1), the researchers asked the participants what their goal had been while carrying it out, aiming to find out whether this goal affected the results. More specifically, participants were asked to indicate how true each of a series of statements was for them: Were they motivated merely by the desire to finish the task? Were they aiming to improve on their previous performances? Or were they carrying out the task with the urge to do better than others (a goal known as the “others-related goal”)?

Social needs matter

This second experiment delivered very interesting results: “We found that there is an interaction between what the participants aim to achieve (their goal orientation) and the influence of the perceived source on performance,” said Chevalère. On average, the participants who declared that their motivation had been “to perform better than others” actually performed better after receiving the perceived “computer-provided” cue: a paradoxical result compared to that of the previous experiment. “Some people rely more on the comparison with others, which is a very human trait. We believe that for these people, receiving the human cue triggers a need for relatedness (i.e. the level of social connectedness one needs),” explained Chevalère. “This suggests that receiving human feedback might increase an orientation towards others in performance-related situations, which in turn can reduce performance in cognitively challenging situations,” clarified Rebecca Lazarides. Participants’ need for relatedness triggers thoughts about comparison, expectation and competitiveness that reduce the cognitive resources needed to accurately solve a difficult task. Feedback from the imaginary computer agent seems to be a better choice for these comparison-oriented people because it may prevent these parasitic thoughts and the resulting depletion of cognitive resources. Assessing the goal orientation of participants therefore made it possible to add nuance to the evidence from the first study, which did not take different motivations into account.

So, what does this imply? According to Nicolas Spatola, first author of the study, these experiments highlight, among other things, that it is important to consider users’ subjective perceptions when introducing artificial agents into their environment. This is even more relevant in the context of education. Using artificial agents as tutors for learning is a promising innovation for education. However, it is crucial not to limit these technologies to adapting to students’ performance but also to take into account students’ subjective perceptions and motivational beliefs. While challenging, this approach opens the door to interdisciplinary research.

Publication:

Spatola, N., Chevalère, J., & Lazarides, R. (2021). Human vs computer: What effect does the source of information have on cognitive performance and achievement goal orientation? Paladyn, Journal of Behavioral Robotics, 12(1), 175–186.

https://doi.org/10.1515/pjbr-2021-0012