Now, a team from SCIoI, the Robotic Interactive Perception Lab at TU Berlin and the GRASP Lab at the University of Pennsylvania has taken on this challenge with a bold approach: forget the camera frames altogether.

Their new method, ETAP (Event-based Tracking of Any Point), uses a radically different kind of vision sensor—event cameras—to track motion where conventional cameras simply can’t.

How it works: Seeing motion differently

Event cameras don’t capture still images at a fixed rate like ordinary cameras. Instead, they respond to changes in brightness at each pixel individually, and do so with microsecond precision. What they produce is a continuous stream of ‘events’ rather than a sequence of full images.

This means they don’t suffer from motion blur or overexposure—two things that make standard video-based tracking fall apart.
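For readers who want to see the principle in code, here is a minimal sketch of how a single event-camera pixel could behave. It assumes the commonly used idealized model in which a pixel fires an event each time its log brightness changes by a fixed contrast threshold. The function name `generate_events`, the threshold value and the sample data are illustrative assumptions, not part of ETAP or of any particular sensor.

```python
import math

def generate_events(timestamps, intensities, threshold=0.2):
    """Idealized single-pixel event model (an assumption for illustration).

    Returns a list of (timestamp, polarity) pairs, where polarity is +1
    for a brightness increase and -1 for a decrease.
    """
    events = []
    ref = math.log(intensities[0])  # log brightness at the last event
    for t, value in zip(timestamps[1:], intensities[1:]):
        log_i = math.log(value)
        # Fire one event per threshold step between the reference and the new level.
        while abs(log_i - ref) >= threshold:
            polarity = 1 if log_i > ref else -1
            ref += polarity * threshold
            events.append((t, polarity))
    return events

# One pixel that stays constant, brightens sharply, then dims a little.
times = [0.0, 1e-6, 2e-6, 3e-6]
brightness = [1.0, 1.0, 1.5, 1.2]
events = generate_events(times, brightness)
print(events)
```

Nothing is emitted while the brightness is constant, which is why the output is a sparse stream of changes rather than a sequence of full frames. Real sensors also add noise and per-pixel variation, which this sketch ignores.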

But the event stream also demands new algorithms that can interpret this unusual data format.

That’s where ETAP comes in.

“We’re the first to tackle TAP [tracking any point] using only event data,” says first author and SCIoI member Friedhelm Hamann. “We show that even in fast and chaotic scenes, where conventional vision fails, event cameras combined with the right learning methods can keep track.”

The science behind ETAP

At its core, ETAP is a deep learning-based system that can track multiple arbitrary points—anywhere in a scene—over time, using only the information from event cameras.

To make this possible, the researchers introduced a contrastive learning approach inspired by models like OpenAI’s CLIP. The idea is to teach the system to recognize when two signals—possibly generated under very different motion conditions—actually come from the same visual feature. It’s a smart workaround for one of the big challenges in event data: the fact that how something looks depends on how it moves.
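The contrastive idea can be sketched in a few lines. The example below is a generic symmetric contrastive loss of the kind CLIP popularized, written in plain Python. It is not ETAP's actual loss, whose exact formulation is not given here, and the function names, the temperature value and the toy feature vectors are all assumptions made for illustration. Row i of `feats_a` and row i of `feats_b` stand for descriptors of the same visual feature observed under two different motions.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[i]
    return total / len(logits)

def symmetric_contrastive_loss(feats_a, feats_b, temperature=0.1):
    """Pull matching pairs (same index) together, push all other pairs apart."""
    a = [normalize(v) for v in feats_a]
    b = [normalize(v) for v in feats_b]
    # Cosine similarity between every descriptor in a and every one in b.
    logits = [[sum(x * y for x, y in zip(u, v)) / temperature for v in b] for u in a]
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t))

# Toy descriptors: two features, each seen under two motion conditions.
feats_a = [[1.0, 0.0], [0.0, 1.0]]
feats_b_matched = [[0.9, 0.1], [0.1, 0.9]]   # same features, slightly changed
feats_b_swapped = [[0.1, 0.9], [0.9, 0.1]]   # pairs deliberately mismatched

loss_matched = symmetric_contrastive_loss(feats_a, feats_b_matched)
loss_swapped = symmetric_contrastive_loss(feats_a, feats_b_swapped)
print(loss_matched < loss_swapped)
```

The loss is low when descriptors of the same feature agree despite the change in motion and high when they do not, which is the behavior a network trained this way is pushed toward.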

The team also developed a custom synthetic dataset called EventKubric, which combines physics-based rendering with realistic camera motion and event simulation. This allowed them to train ETAP entirely in a simulated world—no expensive manual labeling needed.

The results: Outperforming the state of the art

In benchmark tests, ETAP doesn’t just hold its own: it dominates. On one standard feature-tracking benchmark, it performs 20% better than previous event-only methods, and it even beats the best systems that combine video and event data by more than 4%.

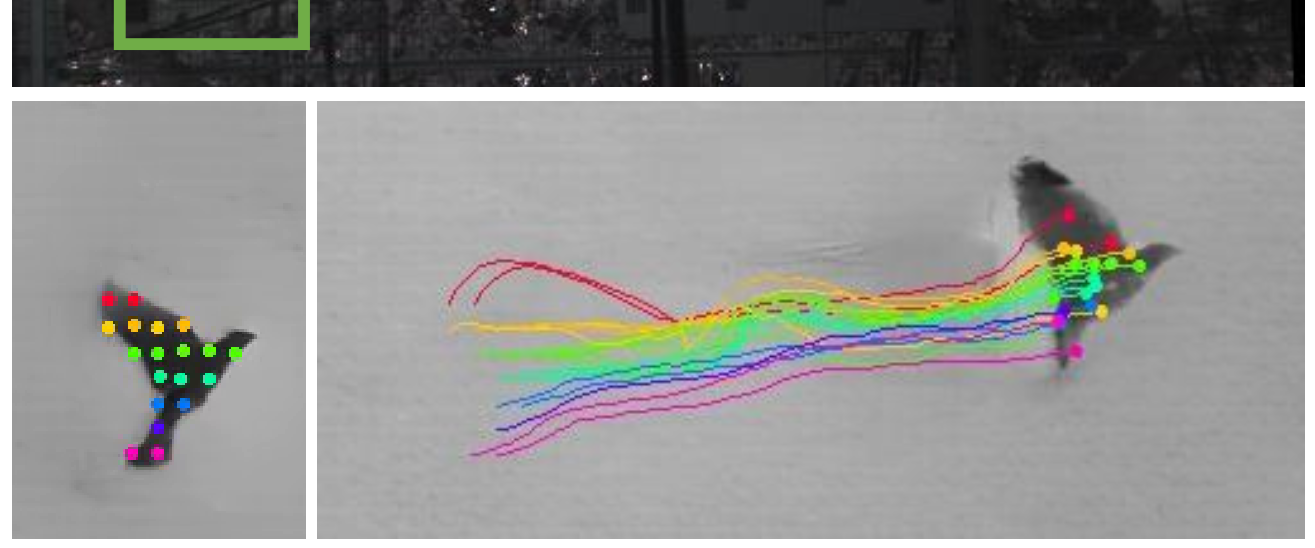

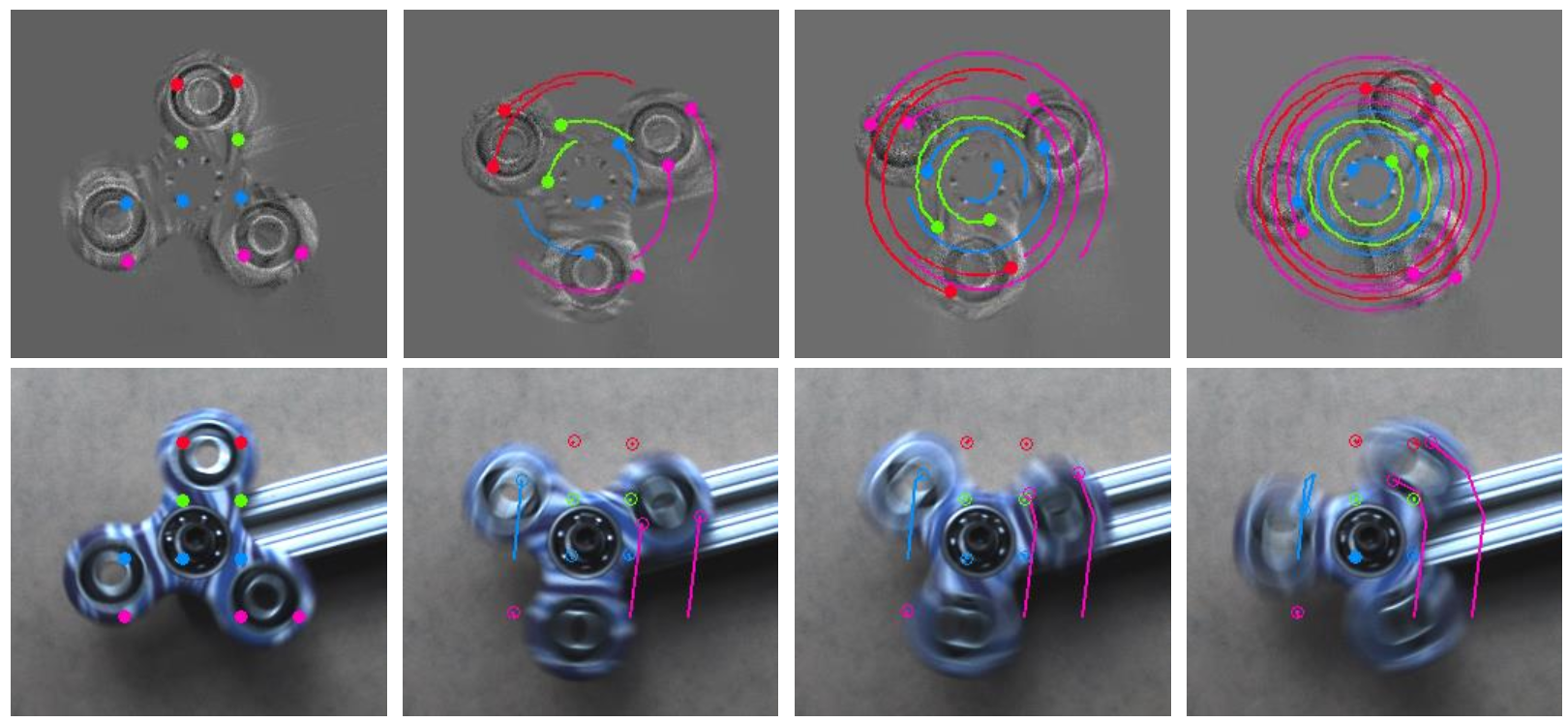

In one particularly vivid test, ETAP tracked points on a fidget spinner rotating in low light, where traditional cameras could barely produce usable frames. Using only events, ETAP kept tracking the spinner with striking accuracy.

Applications: From robots to birds in flight

What can this do in the real world?

Plenty.

- In robotics, ETAP could help autonomous drones or agile robots navigate fast-changing environments, even in the dark or through glare.

- In sports science, it could capture fine motion details of athletes in action without expensive high-speed cameras.

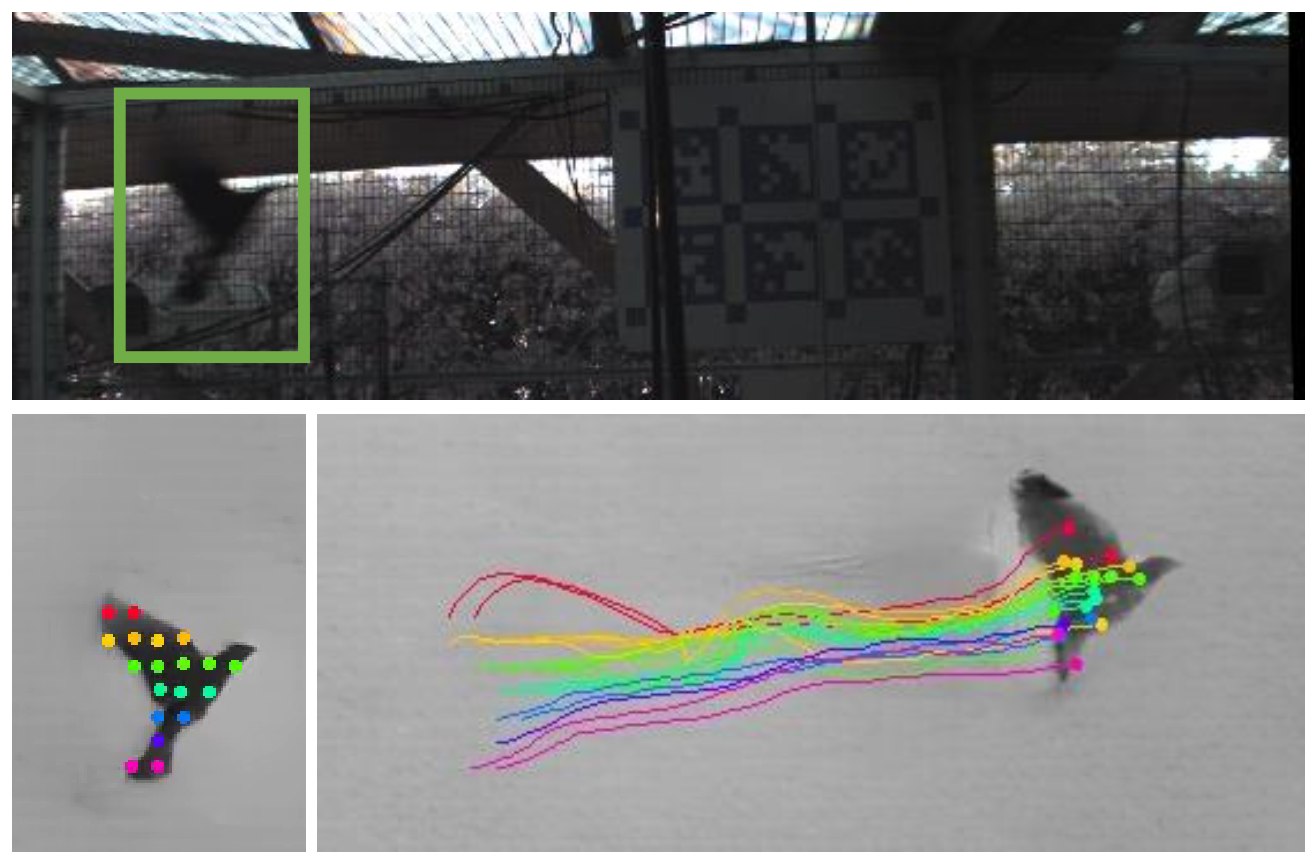

- And for biology, a core focus of SCIoI, ETAP opens up new possibilities for behavioral tracking.

“In SCIoI, we often study animal behavior,” explains SCIoI PI Guillermo Gallego. “We recorded birds in flight, which are fast, highly deformable, and often in challenging lighting. Event cameras combined with ETAP allowed us to track these birds more precisely than any video-based method we’ve tried.”