
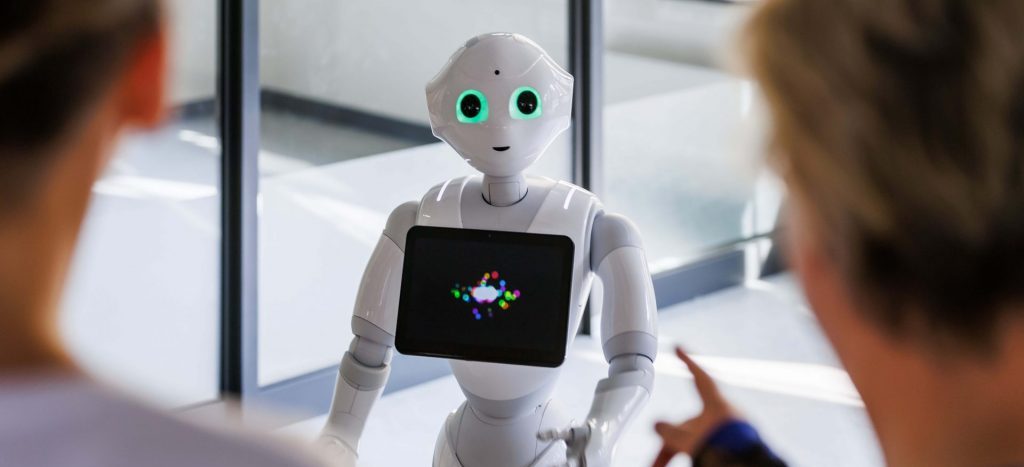

Michael Beetz (Universität Bremen), “Empowering Robots with Digital Mental Models: Filling the Cognitive Gap for Everyday Tasks”

21 March, 2024 @ 8:00 am - 5:00 pm

In this talk I introduce Digital Mental Models (DMMs) as a novel cognitive capability of AI-powered and cognition-enabled robots. By combining digital twin technology with symbolic knowledge representation and embedding this combination in robots, we tackle the challenge of converting vague task requests into specific robot actions, that is, robot motions that cause the desired physical effects and avoid unwanted side effects. This breakthrough enables robots to perform everyday manipulation tasks with an unprecedented level of context-sensitivity, foresight, generality, and transferability. DMMs narrow the cognitive gap that currently exists in robotics by equipping robots with a profound understanding of the physical world and how it works.

This talk will take place in person at SCIoI.