- This event has passed.

POSTPONED: Scott Robbins, “What Machines Shouldn’t Do”

3 November, 2022 @ 10:00 am - 11:00 am

From writing essays to evaluating potential hires, machines are doing a lot these days. In all spheres of life, machines appear to be delegated more and more decisions, and some of these decisions can have a significant impact on human lives. Examples of machines that have had such an impact are widespread: machines that evaluate loan applications, machines that evaluate criminals for sentencing, autonomous weapon systems, driverless cars, and digital assistants. Given that machines cannot be held morally accountable for their actions (Bryson, 2010; Johnson, 2006; van Wynsberghe & Robbins, 2018), the question that governments, NGOs, academics, and the general public should be asking themselves is: how do we keep meaningful human control (MHC) over these machines?

The literature thus far details which features the machine or its context must have for MHC to be realized. Should humans be in the loop or on the loop? Should we require machines to be explainable? Should we endow machines with moral reasoning capabilities? (Ekelhof, 2019; Floridi et al., 2018; Robbins, 2019a, 2019b; Santoni de Sio & van den Hoven, 2018; Wallach & Allen, 2010; Wallach, 2007). Rather than looking to the machine itself or to the part humans play in its context, I argue that we should shine the spotlight on the decisions that machines are being delegated. Meaningful human control, then, is about controlling which decisions are made by machines.

I argue that keeping meaningful human control over machines (especially AI that relies on opaque methods) means restricting machines to decisions that do not require a justifying explanation and whose efficacy can, in principle, be proven. Because contemporary AI methodologies are opaque, many machines cannot offer explanations for their outputs. Many decisions require justifying explanations, so we should not use machines for them. It will come as no surprise that machines should be efficacious if they are to be used, especially in contexts that affect human beings. Increasingly, however, machines are being delegated decisions whose efficacy we cannot, even in principle, evaluate. This should not happen.

These arguments lead to the conclusion that machines should be restricted to descriptive outputs. A human being must always decide how to employ evaluative terms, because these terms not only refer to specific states of affairs but also say something about how the world ought to be. Machines that make decisions based on opaque considerations should not be telling humans how the world ought to be; allowing them to do so would be a breakdown of human control in its most severe form. We would lose control not only over specific decisions in specific contexts, but also over what descriptive content grounds evaluative classifications.

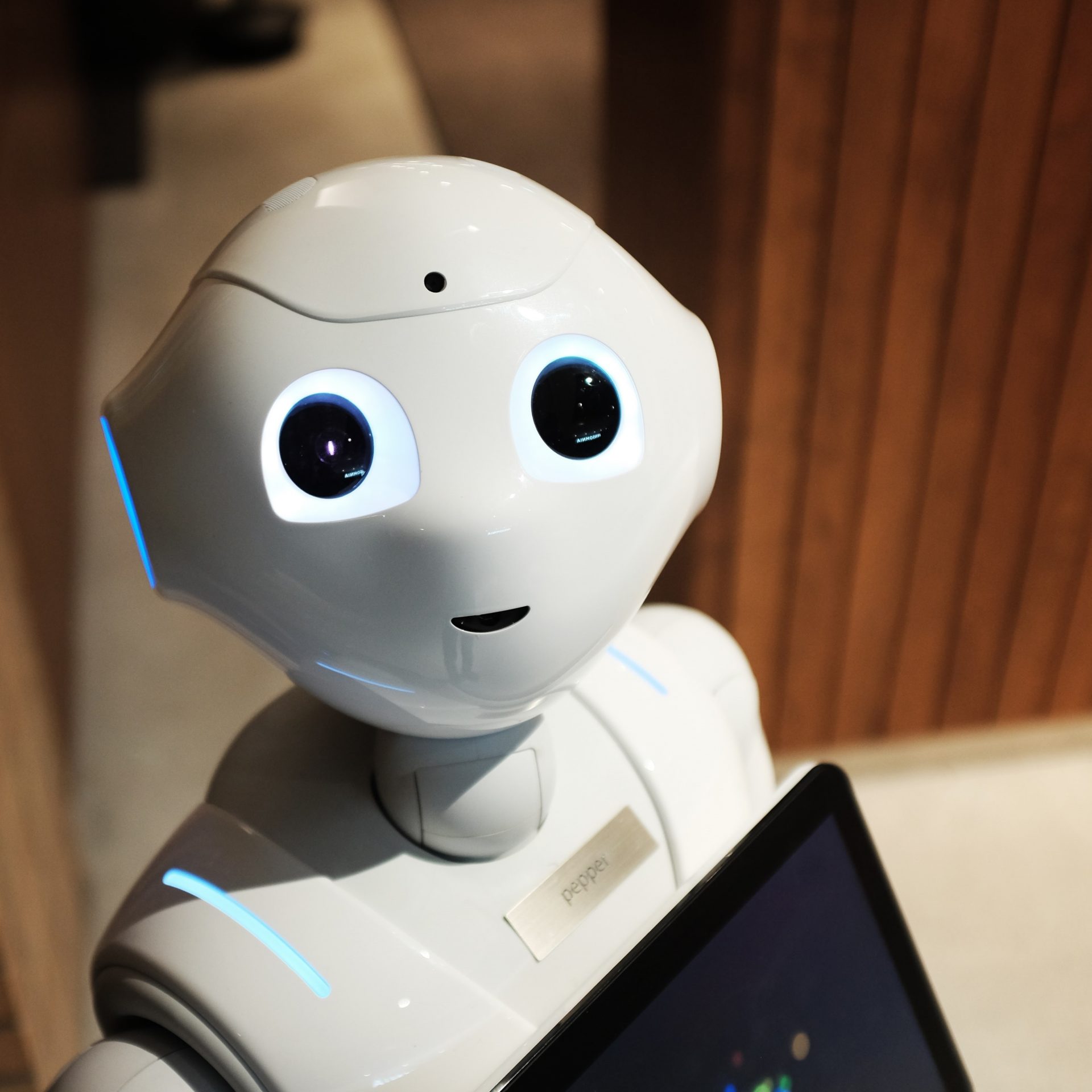

Photo by Alex Knight on Unsplash

This talk will take place in person at SCIoI.