Internal and External Visual Information Sampling Using Eye-Movements and Overt Attention in Dynamic Scenes

Visual Information Sampling

This project builds on the previous SCIoI project 01, in which we aimed at a better understanding of how humans explore the visual environment by moving their eyes to locations of interest and by extracting visual information during periods of fixation. To be of higher relevance for understanding intelligent behavior, we investigated ecologically valid settings, thus complementing the highly reduced settings typically considered in psychology. This entailed (1) dynamic scenes, as active observers, due to their own movement, never have static images available, and (2) task-dependence, as information is often gathered for a purpose. For this, we built the SCIoI Visual Capture Lab, which allowed us to bridge the gap between tightly controlled psychophysical setups and dynamic real-world scenes, recorded large-scale data sets consisting of videos and corresponding eye-movement patterns, and contributed to the integration platform, which allowed us to test our findings in real-world applications. Analysing these data, we could already demonstrate that object representations act as a critical mid-level link between bottom-up salience and top-down guidance.

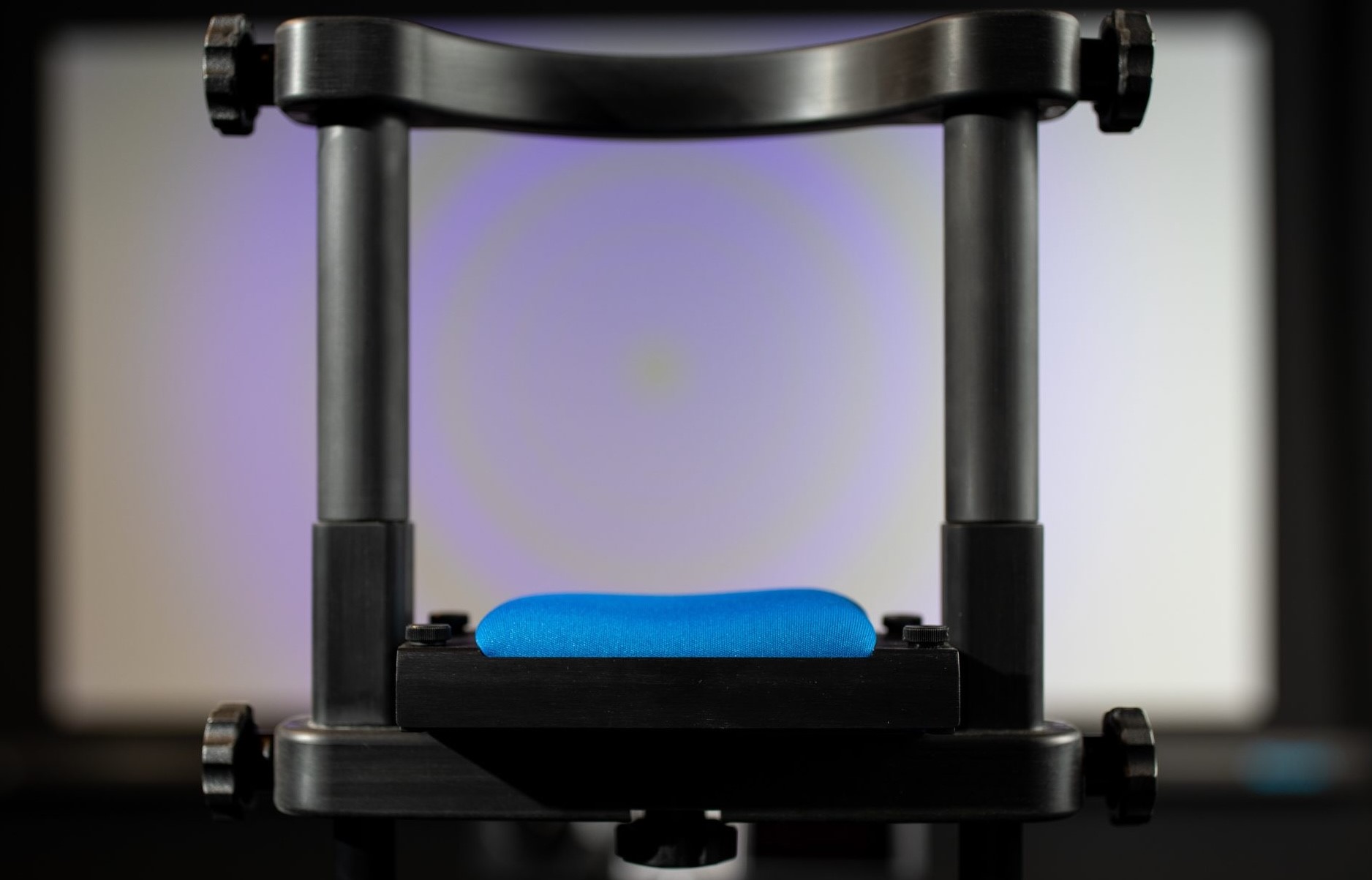

In this project, we want to capitalize on this infrastructure and the recorded data sets to refine our computational modelling framework for attentive visual information sampling. Specifically, we investigate inter-individual differences in gaze behavior in search of different information sampling strategies, as well as the relationship between task, gaze behavior, and scene memorization, adding a working memory component to the current computational modelling framework. In continuation of the integration work performed in the current project 01, our ScanDy saccade prediction framework will be combined with a robotics-motivated visual analysis of fixation locations to help solve the lock-box problem. Thus, results will have direct implications for the SCIoI example behavior 1 “escaping from an escape room”.